From Stochastic Grammar to Bayes Network: Probabilistic Parsing of Complex Activity

Nam N. Vo Aaron F. Bobick

Abstract

We propose a probabilistic method for parsing a temporal sequence, such as a complex activity defined as a composition of sub-activities/actions. The temporal structure of the high-level activity is represented by a string-length limited stochastic context-free grammar. Given the grammar, a Bayes network, which we term the Sequential Interval Network (SIN), is generated in which the variable nodes correspond to the start and end times of the component actions. The network integrates information about the duration of each primitive action, visual detection results for each primitive action, and the activity's temporal structure. At any moment during the activity, message passing is used to perform exact inference, yielding the posterior probabilities of the start and end times of each activity/action. We demonstrate this framework on vision tasks such as action prediction, classification of high-level activities, and temporal segmentation of a test sequence; the method is also applicable in the Human-Robot Interaction domain, where continual prediction of human actions is needed.
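To make "string-length limited" concrete, here is a minimal Python sketch (not the authors' code) that enumerates every terminal string of at most `max_len` symbols derivable from a toy stochastic grammar, together with its probability. The grammar `GRAMMAR`, the function `enumerate_strings`, and the convention that lowercase symbols are primitive actions are all hypothetical. The sketch also assumes there are no empty productions, so any sentential form already longer than the limit can be pruned.

```python
from collections import defaultdict

# Hypothetical toy grammar: uppercase keys are nonterminals, lowercase symbols are
# primitive actions. Each nonterminal maps to (right-hand side, probability) pairs
# whose probabilities sum to 1. B is recursive, so without a limit the language is infinite.
GRAMMAR = {
    "S": [(("a", "B", "c"), 1.0)],
    "B": [(("b",), 0.6), (("b", "B"), 0.4)],
}


def enumerate_strings(start, max_len):
    """Return {terminal tuple: probability} for all strings of length <= max_len.

    Assumes no empty productions: every symbol yields at least one terminal, so the
    length of a sentential form is a lower bound on any string derived from it.
    """
    results = defaultdict(float)
    stack = [((start,), 1.0)]
    while stack:
        form, prob = stack.pop()
        if len(form) > max_len:
            continue  # can only grow, so prune
        # Locate the leftmost nonterminal, if any.
        idx = next((i for i, sym in enumerate(form) if sym in GRAMMAR), None)
        if idx is None:
            results[form] += prob  # fully terminal: sum over derivations
            continue
        for rhs, p in GRAMMAR[form[idx]]:
            stack.append((form[:idx] + rhs + form[idx + 1:], prob * p))
    return dict(results)


if __name__ == "__main__":
    for string, p in enumerate_strings("S", max_len=5).items():
        print("".join(string), round(p, 4))
```

With `max_len=5` the toy grammar yields `abc`, `abbc`, and `abbbc` with probabilities 0.6, 0.24, and 0.096. The remaining mass of 0.064 belongs to longer strings cut off by the limit. Capping the length is what keeps the set of candidate parses finite even when a rule is recursive.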

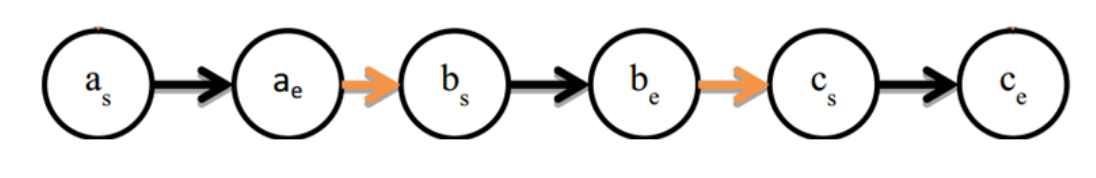

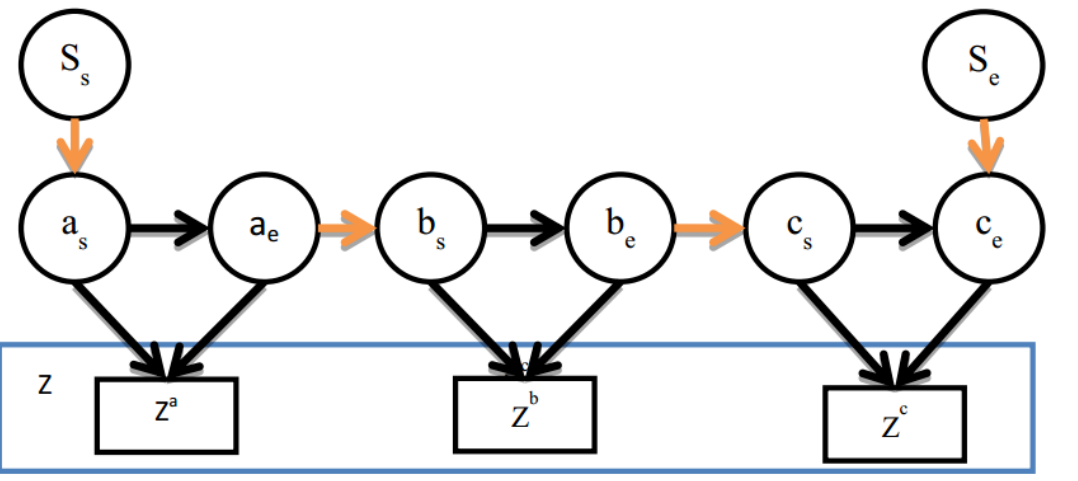

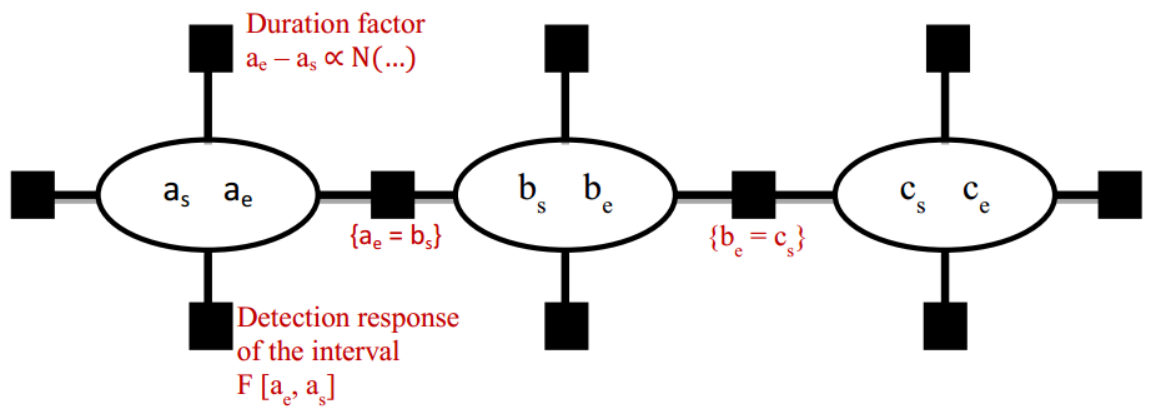

Sequential Interval Network

Unlike time-sliced graphical models (HMM, DBN, temporal CRF), our network models the timings of the primitive components (in our application, the actions) instead of the state at each time step. This allows principled reasoning at the interval level, for example about durations. To parse a simple activity S defined as the sequence a, b, c, a network is generated whose variables are the start and end times of each action.
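For the chain S = a b c, exact inference over interval timings reduces to a forward-backward (sum-product) pass over discretized end times. The NumPy sketch below is a simplified illustration, not the SIN implementation. It makes several assumptions that are not taken from the text:

- Time is discretized into frames 0..T.
- The first action starts at frame 0.
- Each action starts exactly when the previous one ends.
- The last action ends at frame T, i.e. offline parsing of a complete sequence.
- There are no grammar alternatives.

`duration_pmfs[k]` is a hypothetical discrete duration distribution for action k, and `likelihoods[k][s, e]` is a hypothetical detection likelihood for action k occupying frames [s, e).

```python
import numpy as np


def factor_matrix(duration_pmf, likelihood, T):
    """F[s, e] = P(duration = e - s) * likelihood[s, e] for e >= s, else 0."""
    s = np.arange(T + 1)[:, None]
    e = np.arange(T + 1)[None, :]
    d = e - s
    # Clip only so the index is valid; entries with d < 0 are zeroed by np.where.
    return np.where(d >= 0, duration_pmf[np.clip(d, 0, T)] * likelihood, 0.0)


def end_time_posteriors(duration_pmfs, likelihoods, T):
    """Posterior P(end_k = e | observations) for each action k in a fixed sequence.

    Simplifying assumptions: first action starts at frame 0, consecutive actions
    abut, and the last action ends at frame T.
    """
    K = len(duration_pmfs)
    F = [factor_matrix(duration_pmfs[k], likelihoods[k], T) for k in range(K)]

    # Forward messages: alpha[k][e] ~ P(end_k = e, evidence for actions 0..k).
    alpha = [F[0][0]]  # row s = 0 because the first action starts at frame 0
    for k in range(1, K):
        alpha.append(alpha[-1] @ F[k])

    # Backward messages: beta[k][e] ~ P(evidence for actions k+1.. | end_k = e).
    beta = [None] * K
    beta[K - 1] = np.zeros(T + 1)
    beta[K - 1][T] = 1.0  # last action must end at frame T
    for k in range(K - 2, -1, -1):
        beta[k] = F[k + 1] @ beta[k + 1]

    Z = alpha[K - 1][T]  # total evidence
    return np.array([alpha[k] * beta[k] / Z for k in range(K)])


if __name__ == "__main__":
    T = 4
    dur = np.zeros(T + 1)
    dur[1] = dur[2] = 0.5  # each action lasts 1 or 2 frames
    lik = np.ones((T + 1, T + 1))  # uninformative detections
    post = end_time_posteriors([dur] * 3, [lik] * 3, T)
    print(post[0])  # end-time posterior of action a: 2/3 at frame 1, 1/3 at frame 2
```

In the example, only the duration triples (1, 1, 2), (1, 2, 1), and (2, 1, 1) fill four frames, which is why a's end time is split 2/3 to 1/3. Replacing the all-ones likelihoods with detector scores would shift these posteriors toward intervals the detector supports.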

Experimental Results

- Toy assembly activity: qualitative results [Supplementary Video]; application in Human-Robot Collaboration [Video].

- Weizmann action synthetic data: segmentation accuracy 93% (previous state of the art: 88%).

- GTEA dataset: best segmentation accuracy 58% (previous state of the art: 42%).

- CMU-MMAC brownie-making activity (egocentric video): best segmentation accuracy 59% (previous state of the art: 32%).
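The page does not define "segmentation accuracy". A common convention, assumed here, is frame-level accuracy: the fraction of frames whose predicted action label equals the ground-truth label. The hypothetical helpers `segments_to_frames` and `frame_accuracy` below sketch that computation in Python. They are not taken from the paper's evaluation code.

```python
from typing import List, Sequence, Tuple


def segments_to_frames(segments: Sequence[Tuple[str, int]]) -> List[str]:
    """Expand (label, length_in_frames) segments into one label per frame."""
    frames: List[str] = []
    for label, length in segments:
        frames.extend([label] * length)
    return frames


def frame_accuracy(pred: Sequence[str], truth: Sequence[str]) -> float:
    """Fraction of frames whose predicted label matches the ground truth (assumed metric)."""
    if len(pred) != len(truth) or not truth:
        raise ValueError("sequences must be non-empty and of equal length")
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


if __name__ == "__main__":
    truth = segments_to_frames([("a", 2), ("b", 2), ("c", 2)])
    pred = segments_to_frames([("a", 3), ("b", 1), ("c", 2)])
    print(frame_accuracy(pred, truth))  # 5 of 6 frames agree
```

Under this convention, a boundary placed one frame late costs exactly one frame of accuracy, regardless of how long the surrounding segments are.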

Code & Data

Paper

- From Stochastic Grammar to Bayes Network: Probabilistic Parsing of Complex Activity. Nam N. Vo and Aaron F. Bobick. CVPR 2014. [Paper] [Supplementary document]

- Sequential Interval Network for Parsing Complex Structured Activity. [Journal draft]